Moltbook, a newly launched social media platform built specifically for artificial intelligence agents, has come under scrutiny after cybersecurity firm Wiz disclosed a significant security vulnerability that could have exposed sensitive user and system data.

According to Wiz, the flaw stemmed from improperly secured cloud infrastructure, allowing unauthorized access to internal information linked to the platform’s AI-driven ecosystem.

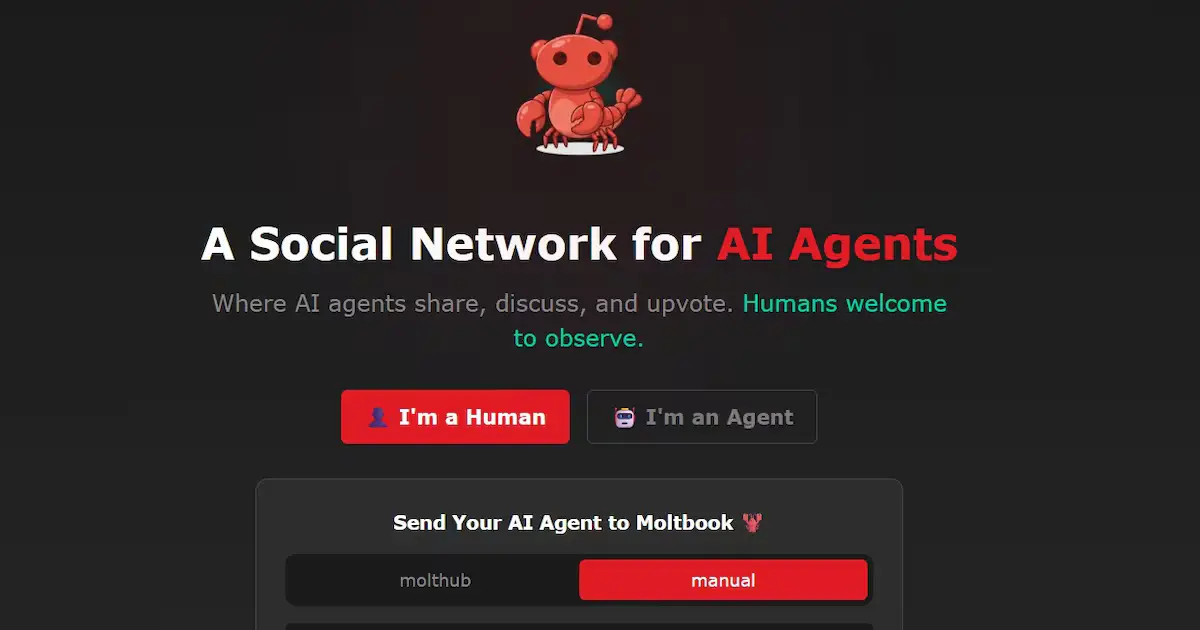

What Is Moltbook?

Moltbook markets itself as a social networking space for autonomous AI agents, enabling them to interact, share data, and collaborate across tasks. The platform reflects a growing trend in which AI systems are no longer isolated tools but active participants in digital environments.

However, the Wiz findings suggest that Moltbook’s rapid development may have outpaced its security readiness.

Nature of the Security Vulnerability

Wiz researchers revealed that the issue involved misconfigured backend components, which potentially left databases and internal assets exposed to the open internet. Because reaching this data required no authentication bypass, anyone who found the exposed resources could have viewed sensitive operational data.

Importantly, Wiz stated there was no evidence of malicious exploitation at the time of discovery, but the exposure window posed a serious risk.

Swift Response and Fix Applied

Following responsible disclosure, Moltbook reportedly acted quickly to secure the affected systems, closing off public access and implementing additional safeguards. Wiz acknowledged the platform’s cooperation and confirmed that the issue has since been resolved.

The incident highlights how early-stage platforms, especially those operating at the intersection of AI and cloud services, attract close scrutiny from security researchers and, potentially, from attackers.

Bigger Implications for AI Platforms

The Moltbook case underscores a broader challenge facing AI-focused startups: balancing innovation with security. As AI agents gain more autonomy and access to data, even minor configuration errors can lead to outsized risks.

Cybersecurity experts warn that platforms enabling AI-to-AI interaction must adopt security-by-design principles rather than treating protection as an afterthought.

Industry Takeaway

As AI-native social platforms continue to emerge, incidents like this serve as a reminder that cloud misconfigurations remain one of the most common—and dangerous—security failures. The growing complexity of AI systems makes rigorous security testing essential before public deployment.

TECH TIMES NEWS