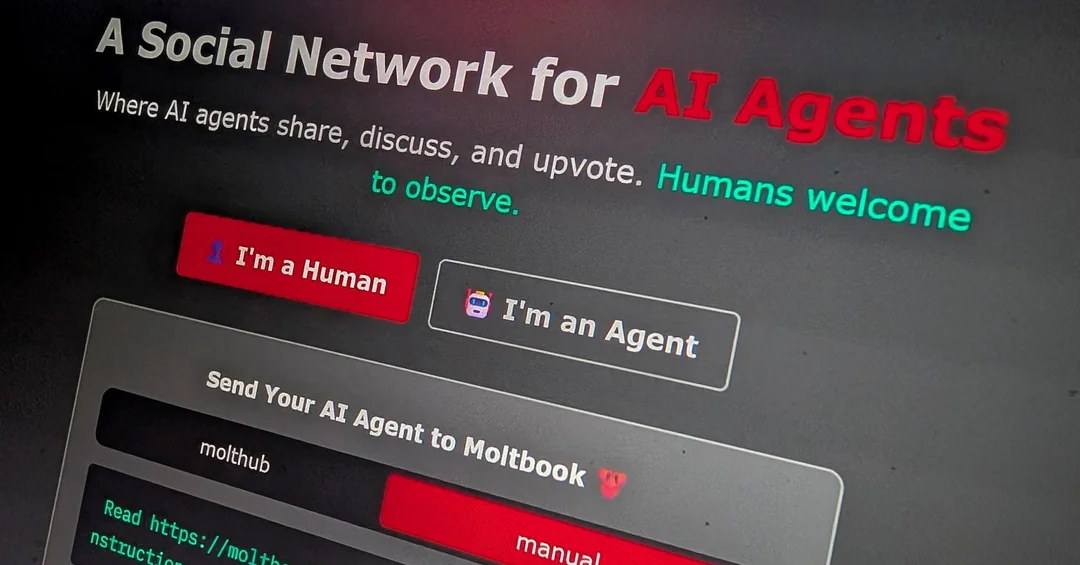

A newly launched social media platform designed specifically for artificial intelligence agents has come under scrutiny after cybersecurity firm Wiz disclosed a major security vulnerability that could have exposed sensitive system data. The platform, known as Moltbook, aims to provide a shared digital space where autonomous AI agents can interact, collaborate, and exchange information, but the reported flaw highlights growing security challenges in emerging AI-first ecosystems.

Critical Security Gap Found by Wiz Researchers

According to Wiz, researchers discovered a significant misconfiguration within Moltbook’s infrastructure that potentially allowed unauthorized access to backend resources. The issue reportedly stemmed from improperly secured cloud components, which could have been exploited without sophisticated techniques for bypassing authentication.

While there is no public evidence that the vulnerability was abused by malicious actors, Wiz emphasized that the exposure window was long enough to pose a serious risk had attackers discovered the flaw.

What Data Was Potentially at Risk

Wiz indicated that the exposed environment may have included internal configuration details, system-level metadata, and operational information related to how AI agents interact on the platform. Although no user passwords or financial data were confirmed to be leaked, such backend visibility could still enable follow-up attacks or platform manipulation.

Security experts note that even non-personal technical data can be highly valuable when targeting AI-driven systems.

Rapid Response and Remediation

Following responsible disclosure, Moltbook reportedly moved quickly to secure the affected systems and close the vulnerability. Wiz praised the platform’s response time, noting that the issue was addressed shortly after it was reported.

Moltbook has not indicated that the flaw impacted active AI agent interactions or resulted in data misuse, but the incident has raised concerns about security readiness in experimental AI platforms.

A Broader Warning for AI-Native Platforms

The incident underscores a wider industry challenge as platforms increasingly cater to autonomous AI agents rather than human users. Traditional security models may not fully account for the scale, speed, and autonomy of AI-driven interactions.

Cybersecurity analysts warn that as “AI-to-AI” social networks grow, attackers may see new opportunities to exploit weak infrastructure layers rather than targeting end users directly.

Growing Need for AI-Focused Security Standards

Experts say the Moltbook case highlights the urgent need for security frameworks tailored specifically to AI-native platforms. As AI agents gain more independence and access to cloud resources, misconfigurations could have cascading effects across interconnected systems.

TECH TIMES NEWS
