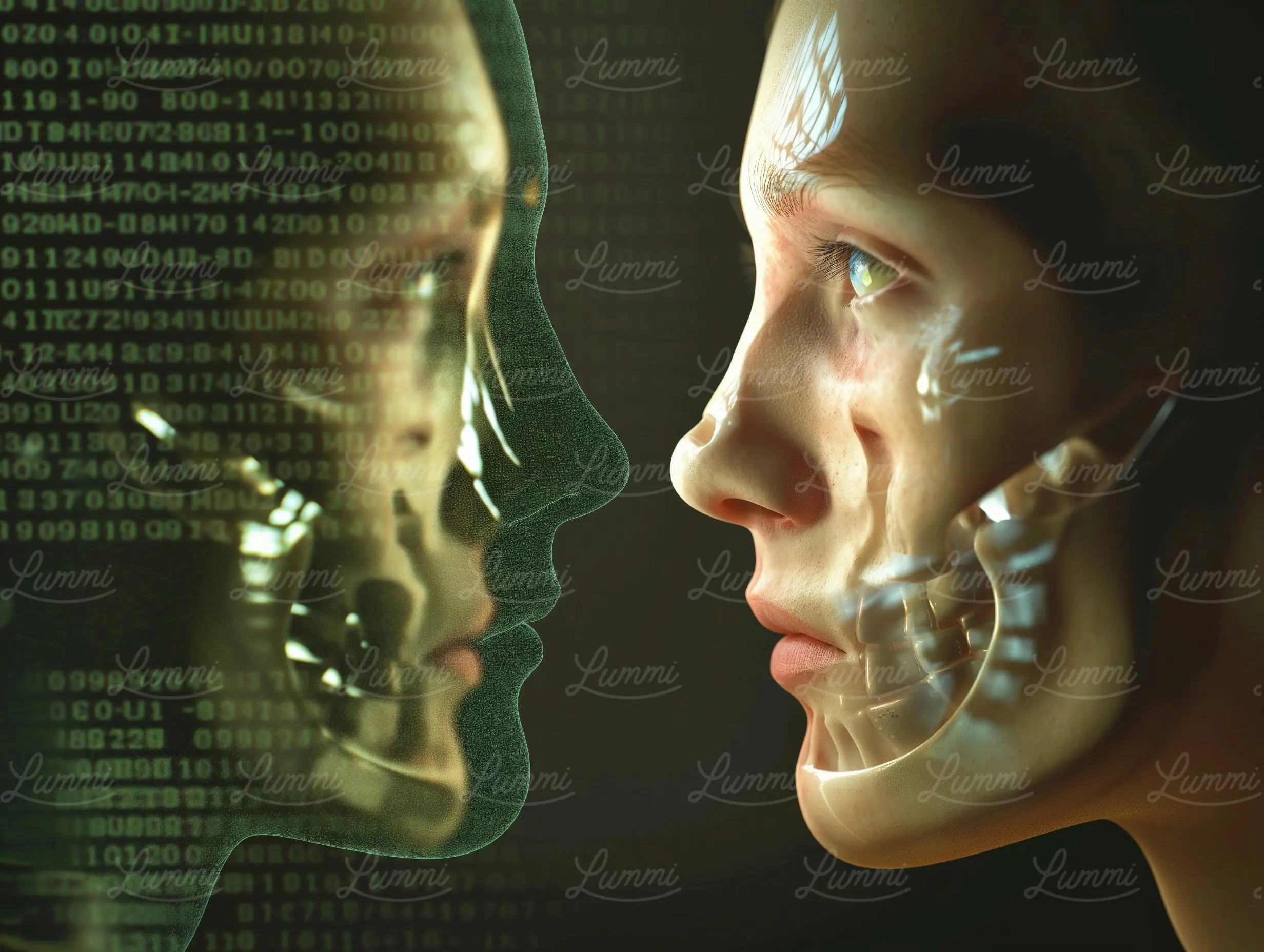

The era of hyper-realistic deepfakes has fully arrived. Thanks to open-source AI models and freely available tools, almost anyone can now create highly convincing fake videos or voice clips from minimal input, sometimes just a few seconds of a person’s face or voice. Deepfake generation is no longer restricted to tech-savvy developers. It has become increasingly democratized, raising urgent concerns about misinformation, identity theft, and political manipulation.

AI vs. AI: The New Cybersecurity Battlefield

As deepfakes become harder to detect with the naked eye or conventional tools, fighting back now requires AI systems that are just as advanced, if not more so. Companies like Microsoft and Meta, along with startups such as Truepic and DeepMedia, are racing to develop forensic AI tools that identify altered content through subtle pixel inconsistencies, anomalies in voice frequency patterns, or data provenance tracking. But experts warn that the arms race between creators and detectors may always favor the attackers.

Threat to Trust: Journalism, Politics, and Personal Lives at Risk

With deepfakes already being used in disinformation campaigns, fake pornography, and financial scams, experts caution that the line between reality and fiction is being dangerously blurred. Deepfakes targeting political figures and celebrities already circulate widely on social platforms. Worse, revenge-motivated deepfakes have begun targeting private individuals, who are often left without legal recourse. This wave of synthetic media poses an existential threat to digital trust, especially in an election year.

AI Regulation Struggles to Keep Pace

While governments in the US, the EU, and India have begun discussing or drafting regulations on AI-generated content, enforcement remains a challenge. There is little global consensus on standards for labeling or detecting deepfakes. Digital watermarking and content authenticity initiatives are underway but have yet to scale. Without swift policy action, experts fear the internet could be flooded with undetectable falsehoods.

What Can Users Do? Stay Skeptical, Stay Updated

Cybersecurity experts advise that until detection tools become widespread and accessible, public awareness is key. Fact-checking, source verification, and cautious consumption of sensational content are now essential skills. Social platforms, meanwhile, are under pressure to integrate better moderation tools and content-origin verification technologies to prevent the viral spread of fakes.

TECH TIMES NEWS