In a significant move aimed at curbing the misuse of artificial intelligence, the government has introduced stricter compliance requirements for online platforms regarding AI-generated and deepfake content. The new directives mandate quicker removal of flagged content and impose greater accountability on intermediaries, including social media companies and digital platforms.

The updated framework comes amid growing concerns about the rapid proliferation of manipulated videos, synthetic audio, and fabricated images that have the potential to mislead users, damage reputations, and disrupt public order.

Faster Takedown of Flagged Content Made Mandatory

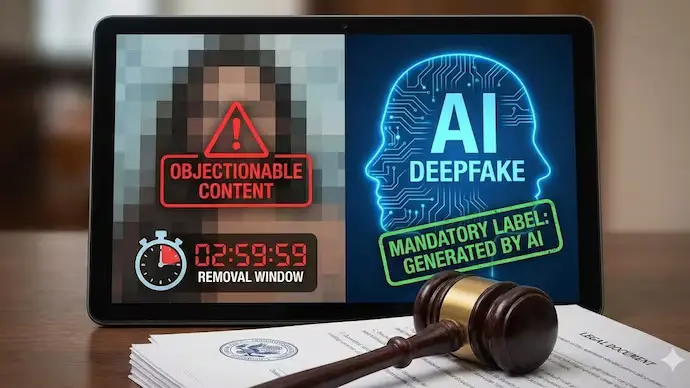

Under the revised guidelines, online platforms are required to act promptly upon receiving complaints about harmful AI-generated or deepfake content. Officials have emphasized that intermediaries must remove or disable access to such content within a defined timeframe after it is reported or flagged by authorities or affected individuals.

Failure to comply with these directions could lead to penalties, including loss of safe harbour protections under existing IT laws. The government has reiterated that platforms must ensure robust grievance redressal systems and appoint compliance officers to handle such matters efficiently.

Clear Labelling and Transparency Requirements

The new rules also stress the importance of transparency. Platforms are expected to deploy mechanisms that can identify and label synthetic or AI-generated media where feasible. Authorities have also encouraged companies developing generative AI tools to build in safeguards against misuse and to watermark AI-generated outputs.

By mandating traceability and better disclosure practices, policymakers aim to reduce the spread of deceptive digital content while maintaining space for legitimate innovation.

Focus on User Safety and Public Trust

Officials stated that the measures are designed to protect users from misinformation, online impersonation, financial fraud, and reputational harm caused by deepfakes. With elections, public events, and sensitive social issues increasingly vulnerable to digital manipulation, the government has highlighted the need for responsible AI deployment.

Cybersecurity experts have welcomed the move but noted that enforcement and technological capability will determine the effectiveness of the policy. They argue that collaboration among regulators, tech companies, and civil society is crucial to addressing the evolving nature of AI-generated threats.

Industry Response and Way Forward

Technology firms are expected to review their content moderation frameworks and AI safety protocols to comply with the tightened norms. While some industry stakeholders have expressed concerns about operational challenges and potential over-regulation, others see the move as necessary to build trust in AI systems.

TECH TIMES NEWS
