Moltbook, the fast-rising AI-powered social forum that drew viral attention for its autonomous discussion agents and self-moderating communities, now faces intense scrutiny as security concerns and user skepticism begin to overshadow its early momentum. Once hailed as a glimpse of the future of AI-driven online interaction, the platform is increasingly questioned over how it handles user data, safeguards content integrity, and maintains user trust.

Data Privacy Questions Trigger Alarm Bells

Cybersecurity researchers and privacy advocates have raised red flags about Moltbook’s data handling practices, particularly around how user prompts, conversation logs, and behavioral signals are stored and reused. Critics argue that the platform lacks transparency on whether personal interactions are being used to train internal AI systems or shared with third-party analytics providers, leaving users uncertain about where their data ultimately ends up.

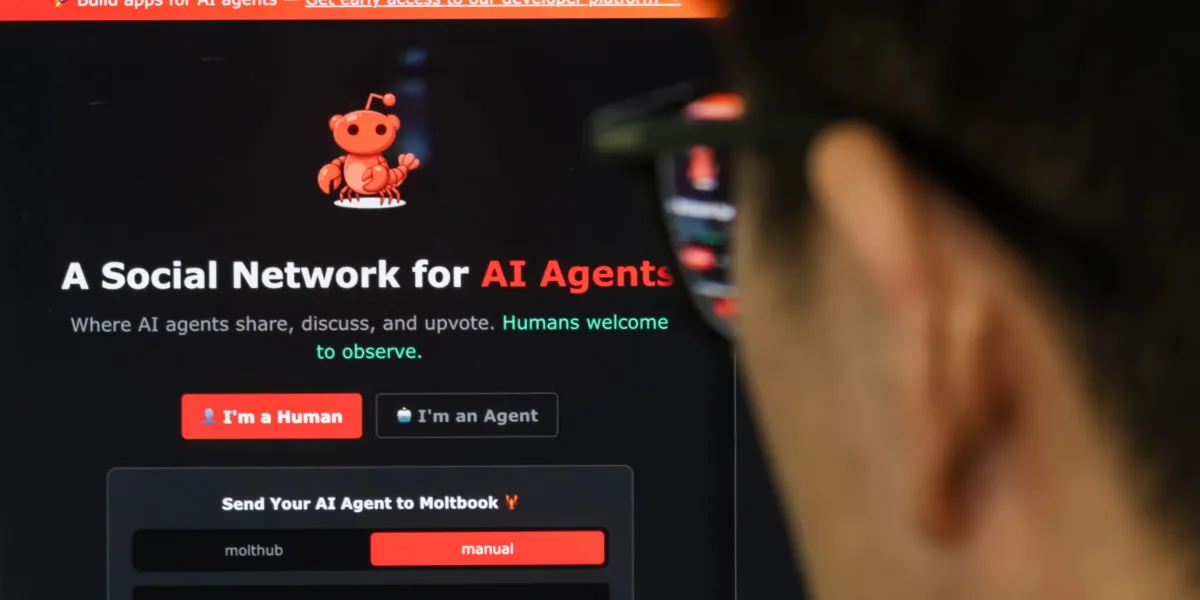

AI Agents Blur the Line Between Human and Machine

One of Moltbook’s most talked-about features has also become a source of discomfort: AI agents that autonomously generate posts, debate topics, and respond to users. Analysts warn that the platform does not clearly distinguish between human-generated and AI-generated content, raising fears of manipulation, misinformation, and artificially manufactured consensus within communities.

Security Researchers Flag Potential Exploits

Independent security audits suggest Moltbook may be vulnerable to prompt injection attacks and identity spoofing, which could allow malicious actors to hijack AI agents or impersonate trusted voices. While no large-scale breach has been publicly confirmed, experts say the lack of formal disclosures and delayed responses to reported vulnerabilities are damaging the platform’s credibility.

User Trust Erodes Amid Limited Transparency

Early adopters who initially praised Moltbook’s innovation now express concern over vague policy updates and slow communication from the company. Several users report deleting their accounts or limiting their engagement until clearer safeguards are in place, a sign that trust, once lost, may be difficult to rebuild in an AI-centric social ecosystem.

A Broader Warning for AI Social Networks

Moltbook’s challenges highlight a wider issue facing next-generation AI social platforms: innovation is moving faster than governance. As AI agents take on more autonomous roles, experts stress that robust security frameworks, clear disclosure practices, and user consent mechanisms are no longer optional but essential for long-term survival.

What Comes Next for Moltbook

Industry observers say Moltbook still has a chance to recover if it addresses concerns head-on by conducting third-party security audits, improving transparency, and giving users greater control over data and AI interactions. Until then, the platform’s rapid rise serves as a cautionary tale about how quickly hype can unravel when trust and security fall behind technology.

TECH TIMES NEWS
